100th Anniversary of our Institute

Our lab is part of the Chair of Geoinformation Engineering at ETH Zurich, and therefore also part of the Institute of Cartography and Geoinformation, which celebrated its 100th anniversary with a large symposium on 4-5 September 2025.

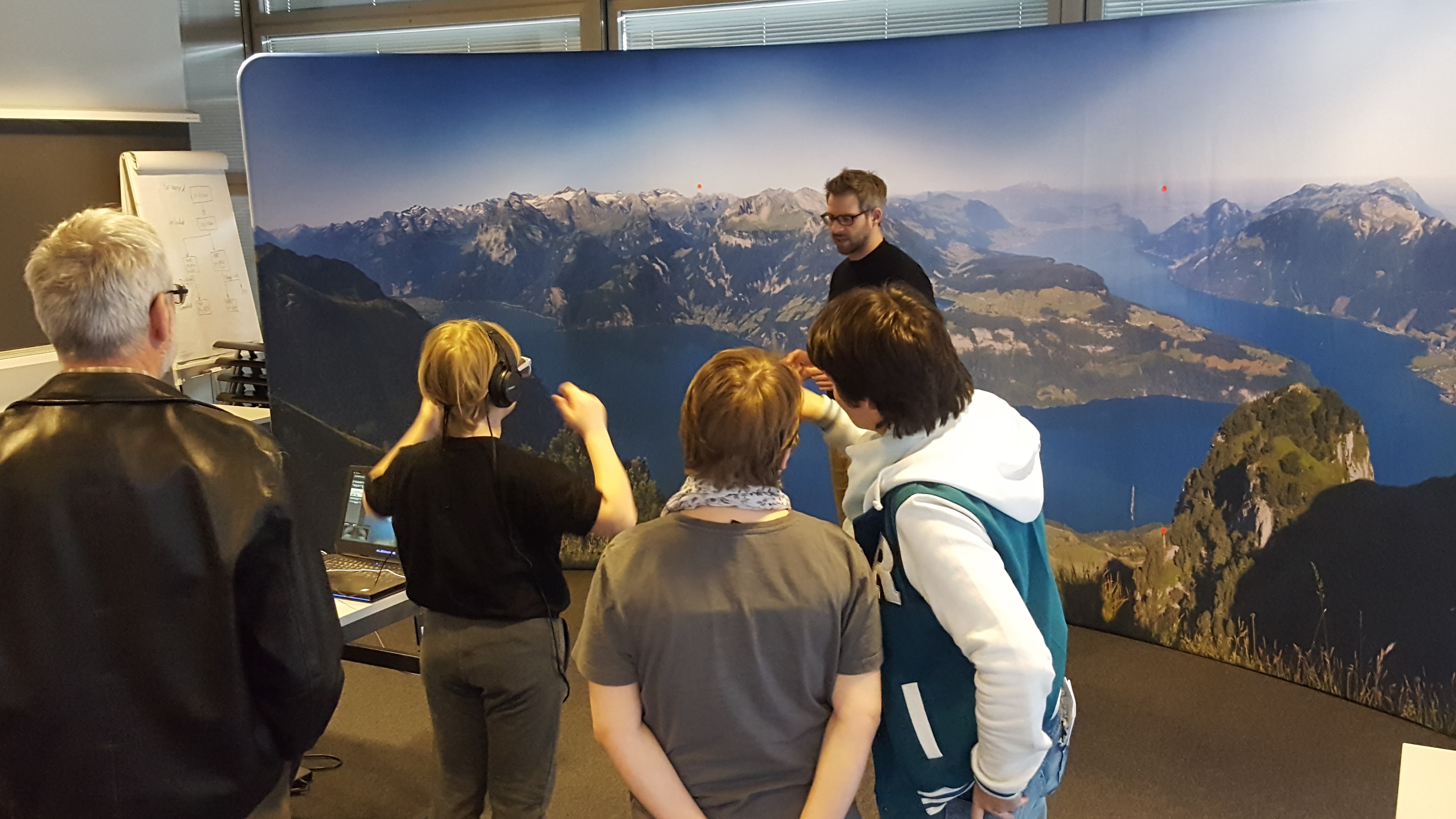

We feel honored to have been part of this prestigious institute for 14 years. At the symposium, Peter took the opportunity to reflect on the history of the geoGAZElab (initially called “Mobile Eye Tracking Lab”): how it all started and the research contributions we have made so far. Slides of Peter’s short presentation are available here.