What an exciting way to start the new year!

Our Winter School on “Eye Tracking – Experimental Design, Implementation, and Analysis” took place in the second week of January on Monte Verità in Ascona, Switzerland. It was attended by 36 participants from a wide variety of research backgrounds.

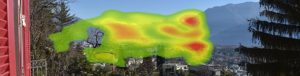

Enkelejda Kasneci (TU Munich, Germany) opened the Winter School with a virtual keynote presenting her research “On opportunities and challenges of eye tracking and machine learning for adaptive educational interfaces and classroom research”. Over the five days of the Winter School, participants learned about the different steps involved in performing eye tracking experiments, from experimental design through data collection and processing to statistical analysis (speakers: Nina Gehrer, University of Tübingen, Germany; Andrew Duchowski, Clemson University, S.C., US; Izabela and Krzysztof Krejtz, SWPS University of Social Sciences and Humanities, Poland). In hands-on sessions, participants designed and performed their own small eye tracking experiments.

The Winter School also fostered exchange between participants through group work, poster presentations, and an excursion. Monte Verità offered the perfect atmosphere and surroundings for this.

Thanks to all who have made this possible, especially our speakers and all sponsors!

Exciting news! The geoGAZElab will be participating in the MSCA Doctoral Network “Eyes for Interaction, Communication, and Understanding (

Fabian Göbel has successfully completed his doctoral thesis on “Visual Attentive User Interfaces for Feature-Rich Environments”. The Department conference approved his doctoral graduation at its most recent meeting. Congratulations, Fabian!